Sometimes you need to get some data from your favorite website to use in your project. But what if this website doesn't have an API?

In my current role, as AWS Practice Lead for Nordcloud, I want to keep track of the AWS certifications our employees have achieved. The AWS Partner Portal gives me that overview, but I wanted to enrich this data with data from our HR system, for example to see how certifications are distributed across countries. Unfortunately, at this time, the portal does not provide an API and only allows me to download a CSV export containing the list of certifications. So I figured I could use a simple web scraping script to (1) log in to the portal and (2) download the CSV file. While I use this as an example, your data source could of course be anything else. In this post, I'll show you how to run Chrome and Selenium in Lambda to run a simple scraper.

Let's start by looking at the different components I'm using in the automation script. Selenium is an open-source solution that can automate web browsers. It is mainly used for testing web applications. The Selenium library is available in different languages, but for this project I'm using the Python version.

Traditional scraping, which only fetches a page's raw HTML, does not work with dynamic sites that load content on the fly. Selenium instead drives a Chrome browser and goes through the website like a normal person would, clicking on buttons and links. This makes Python and Selenium really useful for scraping JavaScript-based websites that load content dynamically. The same kind of code is also commonly used for performance testing: stress testing a website by simulating many real users visiting the site. The expensive stress-testing SaaS applications that charge an arm and a leg typically use this kind of code somewhere in their back end to simulate website traffic.

This type of code needs to run from a server for many practical reasons. If you are in doubt: web scraping is a borderline risky task that can get you banned from some sites, and you don't want your personal IP address banned, so use a virtual server for scraping. This guide will show you how to set up an Ubuntu Virtual Private Server (VPS) for web scraping with Selenium.

# Prerequisites

We are working with an Ubuntu 20.04 virtual server from DigitalOcean. All these steps assume that you are already working on that virtual server.
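Since every step assumes you are on the Ubuntu 20.04 server, one quick way to confirm that from Python is to read `/etc/os-release`, the standard file in which systemd-based distributions such as Ubuntu describe themselves. This is a minimal sketch; the helper names `parse_os_release`, `is_ubuntu_2004` and `check_server` are hypothetical, and the parser only handles simple `KEY=value` lines with optional quotes.

```python
"""Hypothetical pre-flight check: are we on Ubuntu 20.04?"""
from pathlib import Path


def parse_os_release(text: str) -> dict:
    """Parse the KEY=value lines of an os-release file into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments and anything that isn't KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Values may be wrapped in double or single quotes.
        info[key] = value.strip().strip('"').strip("'")
    return info


def is_ubuntu_2004(info: dict) -> bool:
    """Return True if the parsed os-release data describes Ubuntu 20.04."""
    return info.get("ID") == "ubuntu" and info.get("VERSION_ID") == "20.04"


def check_server(path: str = "/etc/os-release") -> bool:
    """Read the os-release file at `path` and report whether it is Ubuntu 20.04."""
    try:
        text = Path(path).read_text()
    except OSError:
        # No os-release file here (e.g. macOS or Windows), so not our server.
        return False
    return is_ubuntu_2004(parse_os_release(text))
```

On the DigitalOcean server, `check_server()` should return `True`; on a machine without that file it simply returns `False`.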

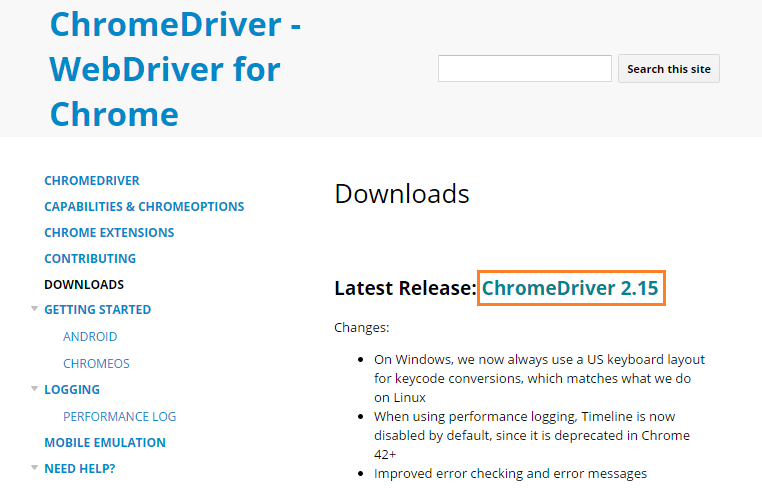

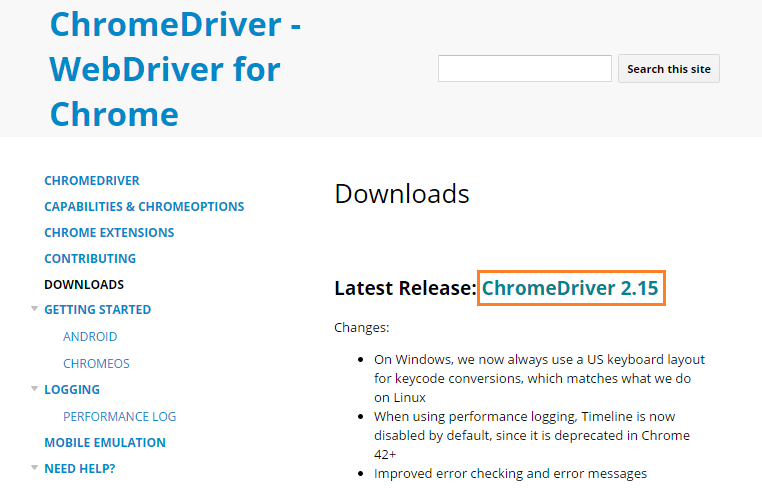
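The two steps from the portal example, (1) logging in and (2) downloading the CSV export, can be sketched with Selenium's Python bindings driving headless Chrome. This is an illustration under assumptions, not the exact script: the URL, the element IDs (`username`, `password`, `export-csv`) and the `/tmp/downloads` directory are hypothetical placeholders rather than the real AWS Partner Portal markup, and the Chrome flags are common choices for a headless server, not requirements. Selenium is imported inside `login_and_download` so the file still loads where Selenium isn't installed. The `wait_for_csv` helper relies on Chrome writing in-progress downloads as `*.crdownload` files.

```python
import time
from pathlib import Path
from typing import Optional


def wait_for_csv(download_dir: str, timeout: float = 60.0, poll: float = 0.5) -> Optional[Path]:
    """Poll download_dir until a finished .csv file appears.

    Chrome names in-progress downloads *.crdownload, so we only return once
    a .csv exists and no partial download is left. Returns None on timeout.
    """
    folder = Path(download_dir)
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        csvs = sorted(folder.glob("*.csv"), key=lambda p: p.stat().st_mtime)
        partial = list(folder.glob("*.crdownload"))
        if csvs and not partial:
            return csvs[-1]  # newest completed CSV
        time.sleep(poll)
    return None


def login_and_download(url: str, user: str, password: str,
                       download_dir: str = "/tmp/downloads") -> Optional[Path]:
    """Log in to a portal and click its CSV export (hypothetical selectors)."""
    # Imported here so this module can be loaded without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    Path(download_dir).mkdir(parents=True, exist_ok=True)
    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")           # no display on a VPS
    options.add_argument("--no-sandbox")             # often needed when running as root
    options.add_argument("--disable-dev-shm-usage")  # small /dev/shm on many servers
    options.add_experimental_option("prefs", {
        "download.default_directory": download_dir,
        "download.prompt_for_download": False,
    })

    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        wait = WebDriverWait(driver, 20)
        # (1) Log in. These element IDs are placeholders for the real page's markup.
        wait.until(EC.presence_of_element_located((By.ID, "username"))).send_keys(user)
        password_field = driver.find_element(By.ID, "password")
        password_field.send_keys(password)
        password_field.submit()
        # (2) Click the export button once the post-login page has rendered.
        wait.until(EC.element_to_be_clickable((By.ID, "export-csv"))).click()
        return wait_for_csv(download_dir)
    finally:
        driver.quit()
```

Because `wait_for_csv` returns the newest CSV as soon as no partial download remains, it is safest to start from an empty download directory so an older export isn't picked up by mistake.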

# Install Chrome Browser and Chromedriver on Ubuntu 20.04
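Whichever way you install them, Chrome and Chromedriver need to share the same major version, otherwise Selenium fails to start a browser session. A small Python check can compare the `--version` output of both binaries. This is a sketch; the binary names `google-chrome` and `chromedriver` are assumptions about what ends up on your `PATH`, so adjust them if you installed Chromium or put the driver somewhere else.

```python
import re
import shutil
import subprocess
from typing import Optional


def major_version(version_output: str) -> Optional[int]:
    """Extract the major version from output like 'Google Chrome 114.0.5735.90'."""
    match = re.search(r"(\d+)\.\d+\.\d+", version_output)
    return int(match.group(1)) if match else None


def binary_major_version(binary: str) -> Optional[int]:
    """Run `<binary> --version` if it is on PATH and return its major version."""
    path = shutil.which(binary)
    if path is None:
        return None  # not installed, or not on PATH
    result = subprocess.run([path, "--version"], capture_output=True, text=True, check=False)
    return major_version(result.stdout)


def versions_match(chrome: str = "google-chrome", driver: str = "chromedriver") -> bool:
    """True if both binaries are installed and report the same major version."""
    chrome_major = binary_major_version(chrome)
    driver_major = binary_major_version(driver)
    return chrome_major is not None and chrome_major == driver_major
```

Running `versions_match()` after the installation steps should return `True`; if it returns `False`, check that both binaries are on `PATH` and that their versions agree.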

